dbt Demystified: A Practical Guide for Infrastructure Engineers

Data transformation, SQL, Models... Oh my.

Today I’m going to take a look at dbt: “the industry standard for data transformation”.

As an infrastructure engineer working on a large data platform, I see data engineers and analysts writing DAGs that schedule dbt commands but I couldn’t tell you what those dbt commands actually do.

dbt has become such a widely-used tool that I figured it’s worth doing a deep dive and learning about its history, what problems it solves, the core concepts, and the features that make it such a popular tool.

I’m writing this for people who are curious about dbt or just want to learn more about data platform tools. Whether you work on infra, like myself, or a data engineer or analyst, I hope you will find this post helpful in understanding dbt and where it fits in within a data platform.

First, let’s make sure we understand what “data transformation” means.

Data Transformation

dbt is the self-proclaimed industry standard for data transformation. I like to think of data transformation as the process of turning raw data into something useful.

An example of raw data could be a huge log file from an e-commerce website containing every single user click, page view, and transaction timestamp. This is known as “clickstream data”.

Imagine you are a business analyst trying to figure out sales trends for your company, and you see this:

2025-05-18T09:23:47.128Z,user_592781,page_view,product_page,sku_12458,mobile_android,referrer=google,session_id=a7f92c3d1

2025-05-18T09:23:51.654Z,user_592781,product_click,sku_12458,mobile_android,session_id=a7f92c3d1

2025-05-18T09:24:03.211Z,user_592781,add_to_cart,sku_12458,quantity=1,mobile_android,session_id=a7f92c3d1

2025-05-18T09:24:18.976Z,user_287456,page_view,homepage,desktop_chrome,referrer=direct,session_id=b2e45f8a2

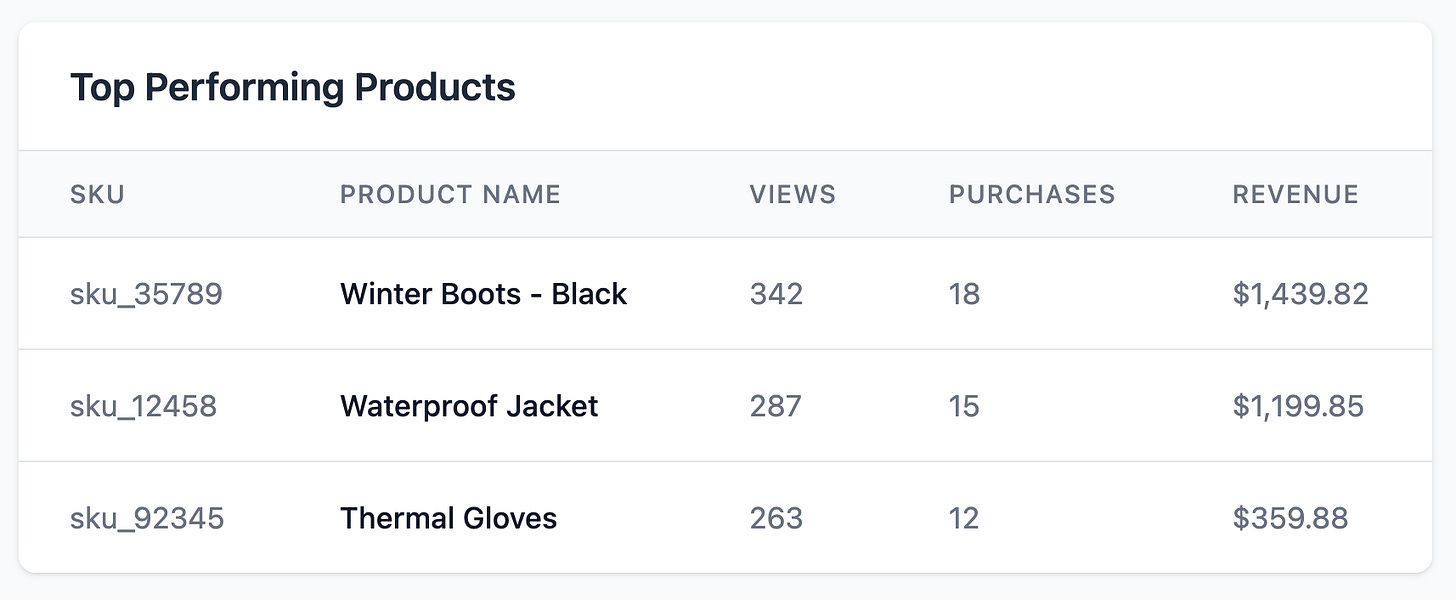

... this is just a snippet, there are hundreds of thousands of similar linesThis raw data is not very useful by itself. But through data transformation, you can create useful information from these logs, such as the top performing products.

Transformed data becomes the source that powers dashboards, notebooks, machine learning models, and application features that ultimately help businesses make money and stay competitive.

The Transformation Process

The journey from raw data to refined/useful data typically goes like this:

Raw data is collected from source systems such as website traffic, product usage, CRM activity, or IoT devices.

The data lands in a data warehouse or lake, such as Snowflake or S3, via an ingestion process. This could be batch (a command that copies the data once an hour, for example), or streaming (a continuous flow of raw data into storage). The data is still raw at this point, but its in a place where we can start to transform it.

The raw data is cleaned and standardized — handling missing values, deduplicating records, standardizing formats, masking or removing sensitive information, etc.

The cleaned data is structured and modeled in a way that matches business concepts — Clickstream data, for example (those log lines shown above) can be structured into sessions, which help paint a clearer picture of what the user did while on the website. This stage is where tables like

customers,products, andorderswould be created or updated.The structured data is then enriched with derived columns (new table columns that are not in the raw data, but can be easily calculated and add value to the dataset). The data can also be further enriched by joining data from other tables.

Finally, the data is aggregated at appropriate levels. For example, data platforms often contain daily or hourly tables that can be queried to answer basic questions like, “How many sales did we make on March 3rd?”. Aggregated tables are more performant because you don’t need to query the entire sales table. Instead, you can filter the query by a data partition.

This foundation in data transformation is essential for understanding why dbt has become so popular, especially for steps 4, 5, and 6.

About dbt

dbt is an acronym: data build tool. The project started to form in 2015 when some people from RJMetrics were fed up with the problems analysts were facing: data analysts operating in isolation, outside the silos of traditional software engineers.

dbt wanted to treat “analytic code” — could be SQL, Python, Java, whatever — the same as other software code, and follow the same collaborative practices that software engineers do:

keep their code in source control (e.g., git)

do code reviews with teammates and stakeholders

write tests for quality assurance

write and read documentation (breaking that isolation of knowledge)

a dynamic single source of truth — leveraging the dataset’s schema as it’s public interface so that two people doing the same analysis don’t end up with different results

an environment that isn’t production — a place where analysts can work without impacting users

reduce manual toil by using tools to automate repetitive tasks

So, a group of people formed dbt Labs and, over about 10 years, it has evolved into an industry standard tool that spawned into two products: dbt core (an open-source tool) and dbt cloud (a hosted platform geared towards businesses).

I’ll mostly be focusing on dbt core in the rest of this post. There is so much to learn! Let’s get it installed and try to get a basic workflow up and running.

Getting Started

I’m going to use dbt’s Quickstart for dbt Core using DuckDB to try out the CLI and explore some of the features.

DuckDB is a lightweight database management system that is fast, easy to install, and a perfect tool for a demo that we can run on our machines.

The well-intended folks at dbt labs made this GitHub repo to get started with dbt in “less than a minute” by running a few simple commands. To me, this is a little too fast. So I am going to take my time and see what’s happening under the hood.

The Project

A dbt project is a directory that is structured in a particular way to give the tool context about your… project… and how to build your data sets. At minimum, it needs a dbt_project.yml file at the base of the repo.

dbt_project.yml

I’m not going to dive into all of the specific configurations you can set for your project. You can check out this doc page if you want to go down the rabbit hole. Here’s my brief synopsis:

dbt will automatically look for a file called

dbt_project.yml, recursively, but you can specify the location with a flag or environment variable.dbt demarcates between a folder name and a configuration by using a

+prefix before the configuration namethere are some tricky naming conventions — using dashes (-) when configuring resource types with multiple words, and using underscores (_) when configuring resource types with multiple words for yaml files other than dbt_project.yml — Modern IDEs can help correct you as you write these yaml files, though.

Here’s an example of the contents of a dbt project config file:

name: jaffle_shop

config-version: 2

version: "0.1"

profile: "jaffle_shop"

model-paths: ["models"]

seed-paths: ["seeds"]

test-paths: ["tests"]

analysis-paths: ["analysis"]

macro-paths: ["macros"]

target-path: "target"

clean-targets:

- "target"

- "dbt_modules"

- "logs"

require-dbt-version: [">=1.00", "<2.0.0"]

seeds:

+docs:

node_color: "#cd7f32"

models:

jaffle_shop:

+materialized: table

staging:

+materialized: view

+docs:

node_color: "silver"

+docs:

node_color: "gold"

Project Structure

The dbt docs have an entire section on how to structure your project directory. Here is what I took away from reading through this doc:

Directory structure is very important because dbt has certain expectations and flag that allow you do select which models you want to run based on the directory name and location. For example,

dbt build --select staging.stripe+would look for files in themodels/staging/stripedirectory.File names are also crucial, and the tool is built around certain naming conventions. For example, stg_google_analytics__campaigns.sql — the double underscore between source and entity helps see that we mean the campaigns entity from google analytics rather than the analytics campaigns from google.

Here’s an example of what my repo structure looks like:

.

├── dbt_project.yml

├── models

│ ├── customers.sql

│ ├── docs.md

│ ├── orders.sql

│ ├── overview.md

│ ├── schema.yml

│ └── staging

│ ├── schema.yml

│ ├── stg_customers.sql

│ ├── stg_orders.sql

│ └── stg_payments.sql

├── pyproject.toml

├── README.md

├── requirements.txt

├── seeds

│ ├── raw_customers.csv

│ ├── raw_orders.csv

│ └── raw_payments.csv

└── uv.lockModels

As I'm reading through the dbt docs I often come across words that I don't fully grasp. One of them is "models."

In dbt terminology, a model is specifically a SQL query that's defined in a .sql file within your dbt project. When you run dbt build, these SQL queries get executed against your data warehouse to create tables or views. The output object (the table or view) is also commonly referred to as a model.

What makes models different from raw data tables is that models:

Are defined and version-controlled in your dbt project

Can reference other models using the ref() function

Can be materialized in different ways (as views, tables, incremental tables, etc.)

Are typically transformations of raw data or other models

Raw data that's loaded into your warehouse through other processes isn't considered a model in dbt until you create a SQL transformation for it. Even seed data (those CSV files) aren't models - they're "seeds" in dbt terminology, though they can be referenced by models.

Models typically follow a pattern moving from raw data toward business-ready data:

Staging models: Clean and standardize raw data (prefix: stg_)

Intermediate models: Join and transform data from multiple sources

Mart models: Business-entity focused models ready for consumption

So while the end result is indeed a table or view in your database, a "model" in dbt encompasses both the SQL definition and the resulting database object that was created through the dbt workflow.

Jinja templating

When examining an dbt project, you will see jinja template code written in SQL and markdown files. It might be confusing at first, because a .sql file with something like {{ ref(‘stg_orders’) }} is not valid SQL syntax. But don’t worry, dbt is going to handle that, and compile it all down into valid SQL (or markdown for docs).

Being able to use jinja opens up many doors that allow you to accomplish things you couldn’t with just SQL.

Control structures (if statements and loops)

Accessing environment variables

Macros

Speaking of {{ ref(‘stg_orders’) }} — that’s a macro. Jinja macros are essentially functions. They are pieces of code that can be reused multiple times. They are typically saved in .sql files in a directory called macros.

Developers can create packages and share their macros with other developers. The most popular one is the dbt-utils package, which contains many useful macros, written by dbt experts.

Side note: dbt hub is a repository, kinda like Docker hub, where people in the community can share dbt packages.

Building the dbt project

At this point of my study, I have created the files in my repo, installed my python dependencies (e.g., dbt, duckdb) in a virtual environment. Now what? Time to turn all of our psuedo-SQL code into SQL and build our models.

# Run all seeds, models, snapshots, and tests in DAG order

$ dbt build --log-level debug

Usage: dbt build [OPTIONS]

Try 'dbt build -h' for help.

Error: Invalid value for '--profiles-dir': Path '/Users/lee/.dbt' does not exist.Looks like dbt expects a ~/.dbt directory to exist. I installed dbt within a virtual environment, not on my system OS.

We need to add a profile. Profiles tell dbt how to connect to your data warehouse.

Here’s mine, which uses duckdb as the warehouse:

jaffle_shop: # Name of the profile

target: dev # See line 4

outputs:

dev: # This is the dev "target"

type: duckdb # Type of warehouse

path: "jaffle_shop.duckdb"

threads: 24 # The number of threads the dbt project will run onBy the way, this file should not be checked in to version control (hence the default to ~/.dbt) if it contains sensitive information such as database connection strings, but you can use environment variables to get around that.

Ok, let’s try to build again. Here’s the full output which looks better in the terminal.

$ dbt build

00:02:50 Running with dbt=1.9.4

00:02:50 Registered adapter: duckdb=1.9.3

00:02:51 Found 5 models, 3 seeds, 20 data tests, 428 macros

00:02:51

00:02:51 Concurrency: 24 threads (target='dev')

00:02:51

00:02:51 1 of 28 START seed file main.raw_customers ..................................... [RUN]

00:02:51 3 of 28 START seed file main.raw_payments ...................................... [RUN]

00:02:51 2 of 28 START seed file main.raw_orders ........................................ [RUN]

00:02:51 1 of 28 OK loaded seed file main.raw_customers ................................. [INSERT 100 in 0.03s]

00:02:51 3 of 28 OK loaded seed file main.raw_payments .................................. [INSERT 113 in 0.03s]

00:02:51 2 of 28 OK loaded seed file main.raw_orders .................................... [INSERT 99 in 0.03s]

00:02:51 4 of 28 START sql view model main.stg_customers ................................ [RUN]

00:02:51 6 of 28 START sql view model main.stg_orders ................................... [RUN]

00:02:51 5 of 28 START sql view model main.stg_payments ................................. [RUN]

00:02:51 6 of 28 OK created sql view model main.stg_orders .............................. [OK in 0.04s]

00:02:51 4 of 28 OK created sql view model main.stg_customers ........................... [OK in 0.04s]

00:02:51 5 of 28 OK created sql view model main.stg_payments ............................ [OK in 0.04s]

00:02:51 9 of 28 START test unique_stg_orders_order_id .................................. [RUN]

00:02:51 7 of 28 START test accepted_values_stg_orders_status__placed__shipped__completed__return_pending__returned [RUN]

00:02:51 8 of 28 START test not_null_stg_orders_order_id ................................ [RUN]

00:02:51 11 of 28 START test unique_stg_customers_customer_id ........................... [RUN]

00:02:51 10 of 28 START test not_null_stg_customers_customer_id ......................... [RUN]

00:02:51 14 of 28 START test unique_stg_payments_payment_id ............................. [RUN]

00:02:51 12 of 28 START test accepted_values_stg_payments_payment_method__credit_card__coupon__bank_transfer__gift_card [RUN]

00:02:51 13 of 28 START test not_null_stg_payments_payment_id ........................... [RUN]

00:02:51 9 of 28 PASS unique_stg_orders_order_id ........................................ [PASS in 0.05s]

00:02:51 8 of 28 PASS not_null_stg_orders_order_id ...................................... [PASS in 0.05s]

00:02:51 7 of 28 PASS accepted_values_stg_orders_status__placed__shipped__completed__return_pending__returned [PASS in 0.05s]

00:02:51 10 of 28 PASS not_null_stg_customers_customer_id ............................... [PASS in 0.05s]

00:02:51 11 of 28 PASS unique_stg_customers_customer_id ................................. [PASS in 0.05s]

00:02:51 14 of 28 PASS unique_stg_payments_payment_id ................................... [PASS in 0.05s]

00:02:51 13 of 28 PASS not_null_stg_payments_payment_id ................................. [PASS in 0.05s]

00:02:51 12 of 28 PASS accepted_values_stg_payments_payment_method__credit_card__coupon__bank_transfer__gift_card [PASS in 0.05s]

00:02:51 15 of 28 START sql table model main.customers .................................. [RUN]

00:02:51 16 of 28 START sql table model main.orders ..................................... [RUN]

00:02:51 15 of 28 OK created sql table model main.customers ............................. [OK in 0.02s]

00:02:51 16 of 28 OK created sql table model main.orders ................................ [OK in 0.02s]

00:02:51 18 of 28 START test unique_customers_customer_id ............................... [RUN]

00:02:51 17 of 28 START test not_null_customers_customer_id ............................. [RUN]

00:02:51 28 of 28 START test unique_orders_order_id ..................................... [RUN]

00:02:51 19 of 28 START test accepted_values_orders_status__placed__shipped__completed__return_pending__returned [RUN]

00:02:51 20 of 28 START test not_null_orders_amount ..................................... [RUN]

00:02:51 21 of 28 START test not_null_orders_bank_transfer_amount ....................... [RUN]

00:02:51 22 of 28 START test not_null_orders_coupon_amount .............................. [RUN]

00:02:51 23 of 28 START test not_null_orders_credit_card_amount ......................... [RUN]

00:02:51 24 of 28 START test not_null_orders_customer_id ................................ [RUN]

00:02:51 25 of 28 START test not_null_orders_gift_card_amount ........................... [RUN]

00:02:51 26 of 28 START test not_null_orders_order_id ................................... [RUN]

00:02:51 27 of 28 START test relationships_orders_customer_id__customer_id__ref_customers_ [RUN]

00:02:51 18 of 28 PASS unique_customers_customer_id ..................................... [PASS in 0.05s]

00:02:51 17 of 28 PASS not_null_customers_customer_id ................................... [PASS in 0.05s]

00:02:51 28 of 28 PASS unique_orders_order_id ........................................... [PASS in 0.05s]

00:02:51 20 of 28 PASS not_null_orders_amount ........................................... [PASS in 0.05s]

00:02:51 22 of 28 PASS not_null_orders_coupon_amount .................................... [PASS in 0.05s]

00:02:51 19 of 28 PASS accepted_values_orders_status__placed__shipped__completed__return_pending__returned [PASS in 0.05s]

00:02:51 21 of 28 PASS not_null_orders_bank_transfer_amount ............................. [PASS in 0.05s]

00:02:51 24 of 28 PASS not_null_orders_customer_id ...................................... [PASS in 0.05s]

00:02:51 26 of 28 PASS not_null_orders_order_id ......................................... [PASS in 0.05s]

00:02:51 23 of 28 PASS not_null_orders_credit_card_amount ............................... [PASS in 0.05s]

00:02:51 25 of 28 PASS not_null_orders_gift_card_amount ................................. [PASS in 0.05s]

00:02:51 27 of 28 PASS relationships_orders_customer_id__customer_id__ref_customers_ .... [PASS in 0.05s]

00:02:51

00:02:51 Finished running 3 seeds, 2 table models, 20 data tests, 3 view models in 0 hours 0 minutes and 0.26 seconds (0.26s).

00:02:51

00:02:51 Completed successfully

00:02:51

00:02:51 Done. PASS=28 WARN=0 ERROR=0 SKIP=0 TOTAL=28The build command does a lot of things: discovers the seed data, loads it into a table, creates views, runs tests, and generates files.

After running that command, the dbt created a directory called target.

target

├── compiled

│ └── jaffle_shop

│ └── models

│ ├── customers.sql

│ ├── orders.sql

│ ├── schema.yml

│ │ ├── accepted_values_orders_1ce6ab157c285f7cd2ac656013faf758.sql

│ │ ├── not_null_customers_customer_id.sql

│ │ ├── not_null_orders_amount.sql

│ │ ├── not_null_orders_bank_transfer_amount.sql

│ │ ├── not_null_orders_coupon_amount.sql

│ │ ├── not_null_orders_credit_card_amount.sql

│ │ ├── not_null_orders_customer_id.sql

│ │ ├── not_null_orders_gift_card_amount.sql

│ │ ├── not_null_orders_order_id.sql

│ │ ├── relationships_orders_customer_id__customer_id__ref_customers_.sql

│ │ ├── unique_customers_customer_id.sql

│ │ └── unique_orders_order_id.sql

│ └── staging

│ ├── schema.yml

│ │ ├── accepted_values_stg_orders_4f514bf94b77b7ea437830eec4421c58.sql

│ │ ├── accepted_values_stg_payments_c7909fb19b1f0177c2bf99c7912f06ef.sql

│ │ ├── not_null_stg_customers_customer_id.sql

│ │ ├── not_null_stg_orders_order_id.sql

│ │ ├── not_null_stg_payments_payment_id.sql

│ │ ├── unique_stg_customers_customer_id.sql

│ │ ├── unique_stg_orders_order_id.sql

│ │ └── unique_stg_payments_payment_id.sql

│ ├── stg_customers.sql

│ ├── stg_orders.sql

│ └── stg_payments.sql

├── graph_summary.json

├── graph.gpickle

├── manifest.json

├── partial_parse.msgpack

├── run

│ └── jaffle_shop

│ ├── models

│ │ ├── customers.sql

│ │ ├── orders.sql

│ │ ├── schema.yml

│ │ │ ├── accepted_values_orders_1ce6ab157c285f7cd2ac656013faf758.sql

│ │ │ ├── not_null_customers_customer_id.sql

│ │ │ ├── not_null_orders_amount.sql

│ │ │ ├── not_null_orders_bank_transfer_amount.sql

│ │ │ ├── not_null_orders_coupon_amount.sql

│ │ │ ├── not_null_orders_credit_card_amount.sql

│ │ │ ├── not_null_orders_customer_id.sql

│ │ │ ├── not_null_orders_gift_card_amount.sql

│ │ │ ├── not_null_orders_order_id.sql

│ │ │ ├── relationships_orders_customer_id__customer_id__ref_customers_.sql

│ │ │ ├── unique_customers_customer_id.sql

│ │ │ └── unique_orders_order_id.sql

│ │ └── staging

│ │ ├── schema.yml

│ │ │ ├── accepted_values_stg_orders_4f514bf94b77b7ea437830eec4421c58.sql

│ │ │ ├── accepted_values_stg_payments_c7909fb19b1f0177c2bf99c7912f06ef.sql

│ │ │ ├── not_null_stg_customers_customer_id.sql

│ │ │ ├── not_null_stg_orders_order_id.sql

│ │ │ ├── not_null_stg_payments_payment_id.sql

│ │ │ ├── unique_stg_customers_customer_id.sql

│ │ │ ├── unique_stg_orders_order_id.sql

│ │ │ └── unique_stg_payments_payment_id.sql

│ │ ├── stg_customers.sql

│ │ ├── stg_orders.sql

│ │ └── stg_payments.sql

│ └── seeds

│ ├── raw_customers.csv

│ ├── raw_orders.csv

│ └── raw_payments.csv

├── run_results.json

└── semantic_manifest.json

14 directories, 59 files

In this directory you can see the compiled collection of SQL files, along with the source files, and things like the dependency graph and manifests.

Now this is where things get exciting. Let’s look at what dbt build did to our database.

$ duckcli jaffle_shop.duckdb

Version: 0.2.1

GitHub: https://github.com/dbcli/duckcli

jaffle_shop.duckdb> show tables;

+---------------+

| name |

+---------------+

| customers |

| orders |

| raw_customers |

| raw_orders |

| raw_payments |

| stg_customers |

| stg_orders |

| stg_payments |

+---------------+There are our tables and models! Notice how it prefixed `raw_` to our seed data.

jaffle_shop.duckdb> show table customers; -- snipped the other columns

+-------------------------+

| column_name |

+-------------------------+

| customer_id |

| first_name |

| last_name |

| first_order |

| most_recent_order |

| number_of_orders |

| customer_lifetime_value |

+-------------------------+We can see how the columns of the customers table maps to the final result defined in models/customers.sql:

final as (

select

customers.customer_id,

customers.first_name,

customers.last_name,

customer_orders.first_order,

customer_orders.most_recent_order,

customer_orders.number_of_orders,

customer_payments.total_amount as customer_lifetime_value

from customers

...

)Summary

I can see how this tool would improve organizations that have many people sharing a data warehouse. Not that I can really speak on the struggles of an analyst, but I can understand how using jinja and macros would feel empowering. The tool is quite robust, the documentation is pretty good, and it does have wide adoption. Oh, and its open source :)

If you made it this far, thanks for bearing with me. I hope you learned something. I put all the code in a GitHub repo.